AI CEO warns: chatbot conversations could be used in legal proceedings

In the rapidly evolving world of Artificial Intelligence (AI), data privacy and the use of AI conversations in legal disputes have become significant concerns. Sam Altman, CEO of OpenAI, has been at the forefront of these discussions, warning of potential risks and calling for urgent action.

Recently, Altman has spoken with political decision-makers about the issue, highlighting the potential vulnerability of personal information shared with AI. He has said that privacy concerns make him afraid to use certain AI tools himself, and he has compared intimate conversations with AI to therapy sessions, with one key difference: AI conversations could potentially be used as evidence in court.

AI tools such as OpenAI's ChatGPT seem to have answers to a wide variety of questions, which has made them increasingly popular. However, conversations with AI are not protected by attorney-client privilege or doctor-patient confidentiality. This means that any information shared could potentially be used in legal or criminal contexts.

This is a concern that has resonated with many TikTok users, who have been using ChatGPT to discuss their daily problems. One user summed it up succinctly: "ChatGPT - I'm here to help. Also ChatGPT - Your Honor, here's everything." Another user warned, "You treat ChatGPT like your therapist and forget that it's also your snitch. Everything typed, gets stored. Welcome to the future."

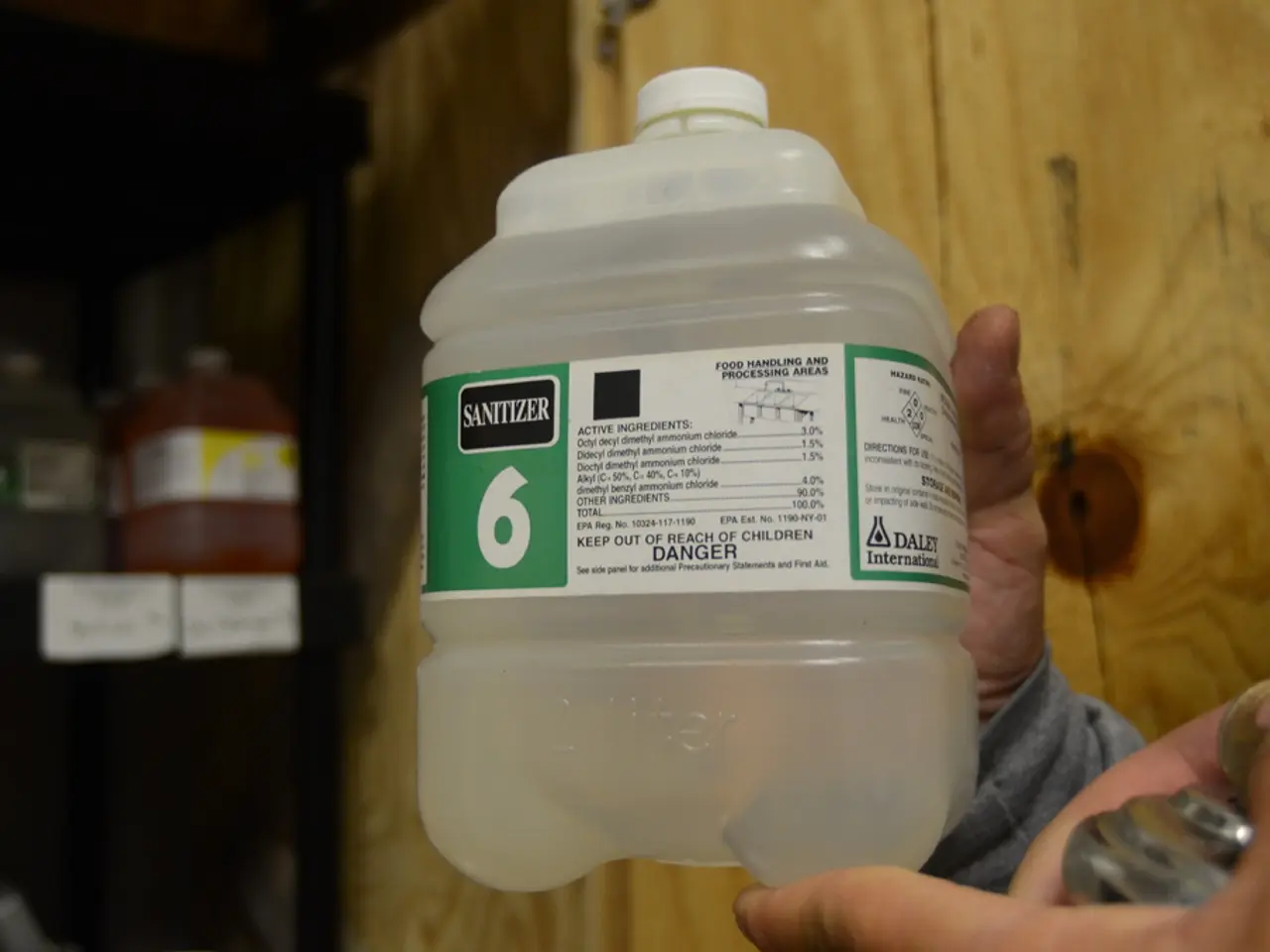

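In the United States, AI-generated calls and certain automated communications, including those by chatbots, are regulated under laws like the Telephone Consumer Protection Act (TCPA), which centers on consent to be contacted. Such laws govern how automated systems reach people, but they do not settle whether information shared with a chatbot can later be used in legal or criminal contexts. Any operator whose chatbot shares personal information must still comply with the applicable consent and privacy standards.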

However, there is no comprehensive federal AI-specific regulation on chatbot use yet. States are actively introducing laws, such as California's AI Transparency Act, which will increase obligations around transparency in AI operations, including chatbots, from 2026 onwards. Other states are also introducing laws that balance innovation with safety and privacy, suggesting regulatory environments are becoming stricter about AI handling sensitive information.

In the EU, the General Data Protection Regulation (GDPR) treats the handling of personal data in AI interactions as data processing, emphasizing user consent, data protection, and rights to privacy. Therefore, using AI chatbot conversations in legal disputes or criminal cases must comply with GDPR's strict data protection principles, particularly when the conversations contain sensitive information such as health data, which GDPR treats as a special category of personal data.

Key regulatory requirements across jurisdictions include obtaining explicit consent from individuals before processing or sharing personal data, disclosing when a user is interacting with an AI, providing opt-out options or other user rights under privacy laws, complying with relevant telecommunications and consumer protection laws, and ensuring proper data security to protect personal information.

As laws continue to evolve, particularly at state and international levels, organizations using AI chatbots in these contexts should carefully review applicable laws, implement strict privacy and consent safeguards, and stay updated on new legislation. It's clear that the future of AI and its role in our daily lives, particularly in sensitive matters such as legal disputes, is an area that requires careful consideration and ongoing discussion.

- As the use of AI chatbots, such as OpenAI's ChatGPT, grows in popularity, concerns about data privacy have intensified, with discussions surrounding the potential misuse of personal information shared with AI in legal disputes.

- Sam Altman, CEO of OpenAI, has been vocal about these concerns, raising them with political decision-makers and calling for urgent action. In the EU, the General Data Protection Regulation (GDPR) already enforces strict data protection principles for AI interactions.

- To ensure compliance with data privacy laws globally, organizations utilizing AI chatbots in sensitive matters like legal disputes should implement stringent privacy and consent safeguards, regularly review applicable laws, and stay updated on emerging legislation.